Introduction

Web scraping is an essential technique for data scientists and analysts who need to extract data from websites for analysis or research. In this tutorial, you’ll learn how to use rvest, a popular R package, to parse HTML, extract data, and handle common web scraping challenges. We will start with basic examples and then expand into more advanced topics like pagination, using sessions, and error handling.

Setting Up

Before you begin, ensure that the rvest package is installed and loaded:

#| label: install-rvest
# Install the package (only needed once)
install.packages("rvest")

# Load the package
library(rvest)

Basic Web Scraping Example

Let’s start with a simple example: fetching a webpage and extracting its title.

#| label: basic-scraping
# Define the URL to scrape
url <- "https://www.worldometers.info/world-population/population-by-country/"

# Read the HTML content from the URL
page <- read_html(url)

# Extract the page title using a CSS selector
page_title <- page %>% html_node("title") %>% html_text()
print(paste("Page Title:", page_title))

Results:

[1] "Page Title: Population by Country (2025) - Worldometer"

Extracting Links and Text

You can extract hyperlinks and their text from a webpage:

#| label: extract-links
# Extract all hyperlink nodes
links <- page %>% html_nodes("a")

# Extract text and URLs for the first 5 links
# (seq_len() gives an empty loop if the page has no links, unlike 1:0)
for (i in seq_len(min(5, length(links)))) {
  link_text <- links[i] %>% html_text(trim = TRUE)
  link_href <- links[i] %>% html_attr("href")
  print(paste("Link Text:", link_text, "- URL:", link_href))
}

Results:

[1] "Link Text: - URL: /"
[1] "Link Text: Population - URL: /population/"
[1] "Link Text: CO2 emissions - URL: /co2-emissions/"
[1] "Link Text: Coronavirus - URL: /coronavirus/"
[1] "Link Text: Countries - URL: /geography/countries-of-the-world/"

Extracting Tables

Many websites display data in tables. Use rvest to extract and convert tables into data frames:

#| label: extract-tables

# Find the first table on the page

table_node <- page %>% html_node("table")

# Convert the table to a data frame

table_data <- table_node %>% html_table(fill = TRUE)

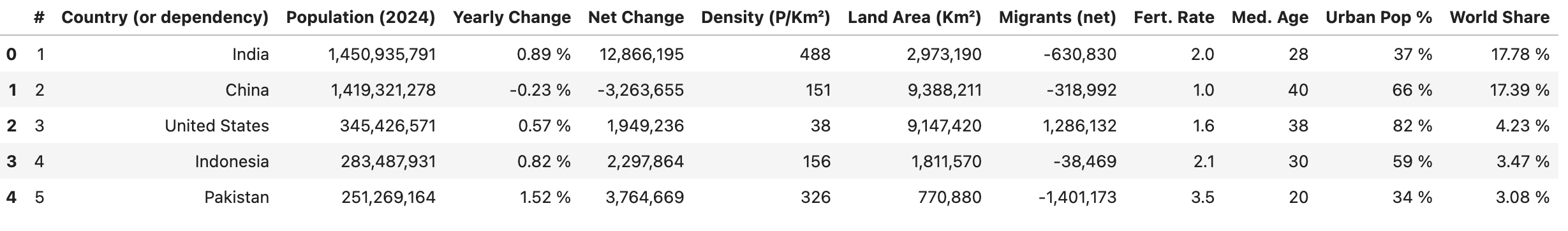

print(head(table_data))Results:
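Scraped tables often hold numbers as text with thousands separators, so a cleanup step usually follows html_table(). The sketch below shows one way to do this on a small in-memory table built with minimal_html(). The table contents and column names are invented for illustration and are not taken from the Worldometer page, and the code assumes rvest 1.0.0 or later.

```r
library(rvest)

# A tiny stand-in page (made-up data) so the example runs without network access
demo_page <- minimal_html('
  <table>
    <tr><th>Country</th><th>Population</th></tr>
    <tr><td>Country A</td><td>1,200</td></tr>
    <tr><td>Country B</td><td>3,450</td></tr>
  </table>')

# Parse the first table into a data frame (tibble)
demo_table <- demo_page %>%
  html_element("table") %>%
  html_table()

# Values such as "1,200" are not recognized as numbers,
# so strip the commas and convert explicitly
demo_table$Population <- as.numeric(gsub(",", "", demo_table$Population))

print(demo_table)
```

The same gsub() and as.numeric() step can be applied to any column of table_data that should be numeric, after checking how the site formats its numbers.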

Handling Pagination

For websites that split data across multiple pages, you can automate pagination. The following code extracts blog post titles and URLs from a paginated website by iterating through a set of page numbers, then combines the results into a data frame.

#| label: pagination-example
library(rvest)
library(dplyr)
library(purrr)
library(tibble)

# Define a function to scrape a page given its URL; returns NULL on failure
scrape_page <- function(url) {
  tryCatch({
    read_html(url)
  }, error = function(e) {
    message("Error accessing URL: ", url)
    return(NULL)
  })
}

# The website marks pages with the URL fragment `#listing-listing-page=`.
# Note: a fragment is not a query parameter. It is handled in the browser and
# is not sent to the server, so each request here may return the same HTML.
# For a site with server-side pagination, use its query parameter instead.
base_url <- "https://quarto.org/docs/blog/#listing-listing-page="
page_numbers <- 1:2 # Example: scrape first 2 pages

# Initialize an empty tibble to store posts
all_posts <- tibble(title = character(), url = character())

# Loop over each page number
for (page_number in page_numbers) {
  url <- paste0(base_url, page_number)
  page <- scrape_page(url)

  if (!is.null(page)) {
    # Extract blog posts: each title and URL is within an <h3> tag
    # with class 'no-anchor listing-title'
    posts <- page %>%
      html_nodes("h3.no-anchor.listing-title") %>%
      map_df(function(h3) {
        a_tag <- h3 %>% html_node("a")
        # html_node() returns a missing node (not NULL) when nothing matches;
        # html_text() and html_attr() then yield NA, so no NULL check is needed
        tibble(
          title = a_tag %>% html_text(trim = TRUE),
          url = a_tag %>% html_attr("href")
        )
      })

    # Append the posts from this page to the overall list
    all_posts <- bind_rows(all_posts, posts)
  }

  # Respectful delay between requests
  Sys.sleep(1)
}

# Display the first few rows of the collected posts
print(head(all_posts))
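The per-page extraction step can also be written without looping over individual nodes, because html_text2() and html_attr() are vectorized over a node set. The following is a minimal sketch of that idea, assuming rvest 1.0.0 or later. The helper name extract_posts() is hypothetical, and the two in-memory pages are made-up stand-ins for downloaded listing pages so that the example runs offline.

```r
library(rvest)
library(dplyr)
library(tibble)

# Hypothetical helper: return one row per post link found on a parsed page
extract_posts <- function(page) {
  links <- page %>% html_elements("h3.listing-title a")
  tibble(
    title = html_text2(links),
    url   = html_attr(links, "href")
  )
}

# Made-up pages standing in for the results of read_html() on each page URL
page_1 <- minimal_html('
  <h3 class="no-anchor listing-title"><a href="/posts/a/">Post A</a></h3>
  <h3 class="no-anchor listing-title"><a href="/posts/b/">Post B</a></h3>')
page_2 <- minimal_html('
  <h3 class="no-anchor listing-title"><a href="/posts/c/">Post C</a></h3>')

# Apply the helper to every page and stack the results into one tibble
demo_posts <- lapply(list(page_1, page_2), extract_posts) %>% bind_rows()

print(demo_posts)
```

In a real run, the list of pages would come from calling scrape_page() on each page URL and dropping any NULL results before extraction.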

Using Sessions

Using sessions can help maintain state (e.g., cookies and headers) across multiple requests, improving efficiency when scraping multiple pages from the same site.

#| label: using-sessions
url <- "https://www.worldometers.info/world-population/population-by-country/"

# Create a session object
session <- session("https://www.worldometers.info")

# Use the session to navigate and scrape
page <- session %>% session_jump_to(url)
page_title <- page %>% read_html() %>% html_node("title") %>% html_text()
print(paste("Session-based Page Title:", page_title))

Error Handling

Integrating error handling ensures that your script can handle unexpected issues gracefully.

#| label: error-handling
# Use tryCatch to handle errors during scraping
safe_scrape <- function(url) {
  tryCatch({
    read_html(url)
  }, error = function(e) {
    message("Error: ", e$message)
    return(NULL)
  })
}

page <- safe_scrape("https://example.com/nonexistent")
if (is.null(page)) {
  print("Failed to retrieve the page. Please check the URL or try again later.")
}

Results:

Error: HTTP error 404.
[1] "Failed to retrieve the page. Please check the URL or try again later."

Best Practices for Web Scraping

- Respect Website Policies: Always check the website’s robots.txt file and terms of service to ensure compliance with their scraping policies.
- Implement Rate Limiting: Use delays (e.g., Sys.sleep()) between requests to avoid overwhelming the server.
- Monitor for Changes: Websites can change their structure over time. Regularly update your selectors and error handling to accommodate these changes.
- Document Your Code: Comment your scripts and structure them clearly for easy maintenance and reproducibility.
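Rate limiting and error handling can be combined in one small wrapper that retries a failed request after a pause. The sketch below is one possible shape for such a helper, not a feature of rvest: the name fetch_with_retry() and its arguments are hypothetical, and the flaky_fetch() function only simulates a request that fails twice so that the example runs without network access.

```r
# Hypothetical helper: call fetch(url) up to max_tries times,
# sleeping `delay` seconds between failed attempts; returns NULL if all fail
fetch_with_retry <- function(url, fetch = rvest::read_html,
                             max_tries = 3, delay = 1) {
  for (attempt in seq_len(max_tries)) {
    result <- tryCatch(
      fetch(url),
      error = function(e) {
        message("Attempt ", attempt, " failed: ", conditionMessage(e))
        NULL
      }
    )
    if (!is.null(result)) {
      return(result)
    }
    if (attempt < max_tries) {
      Sys.sleep(delay)
    }
  }
  NULL
}

# Simulated request (assumption for the demo): fails twice, then succeeds
calls <- 0
flaky_fetch <- function(url) {
  calls <<- calls + 1
  if (calls < 3) stop("temporary failure")
  paste("content of", url)
}

result <- fetch_with_retry("https://example.com", fetch = flaky_fetch, delay = 0)
print(result)
```

With the default fetch argument, the same call pattern would wrap read_html(); a delay of a second or more is a reasonable starting point for real sites.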

Conclusion

This tutorial started with the basics of web scraping with rvest and then covered more advanced techniques, including handling pagination, using sessions, and implementing error handling. With these tools and best practices, you can build robust data extraction workflows for your data science projects.

Happy coding, and enjoy exploring the web with rvest!

Citation

@online{kassambara2024,
  author = {Kassambara, Alboukadel},
  title = {Web {Scraping} with Rvest},
  date = {2024-02-10},
  url = {https://www.datanovia.com/learn/programming/r/tools/web-scraping-with-rvest.html},
  langid = {en}
}